2: Sentence Analysis – 'My Garmin is my body'

"Building on the exploration of AI's emotional comprehension, this film/artwork examines how AI processes physical and emotional connections. The phrase 'My Garmin is my body' is deconstructed through hand gestures, symbols, and sound to create a multimodal representation of AI's understanding."

Tools Used to Visually Interpret AI's Emotional Comprehension

- Hume AI: Analysing speech prosody to detect emotions in language.

- Poe: Interpreting emotions through shapes.

- Stable Audio: Translating emotional tones into sound.

- Generative AI Tools: Exploring how various AI models interpret hand gestures.

Technological Exploration and Challenges

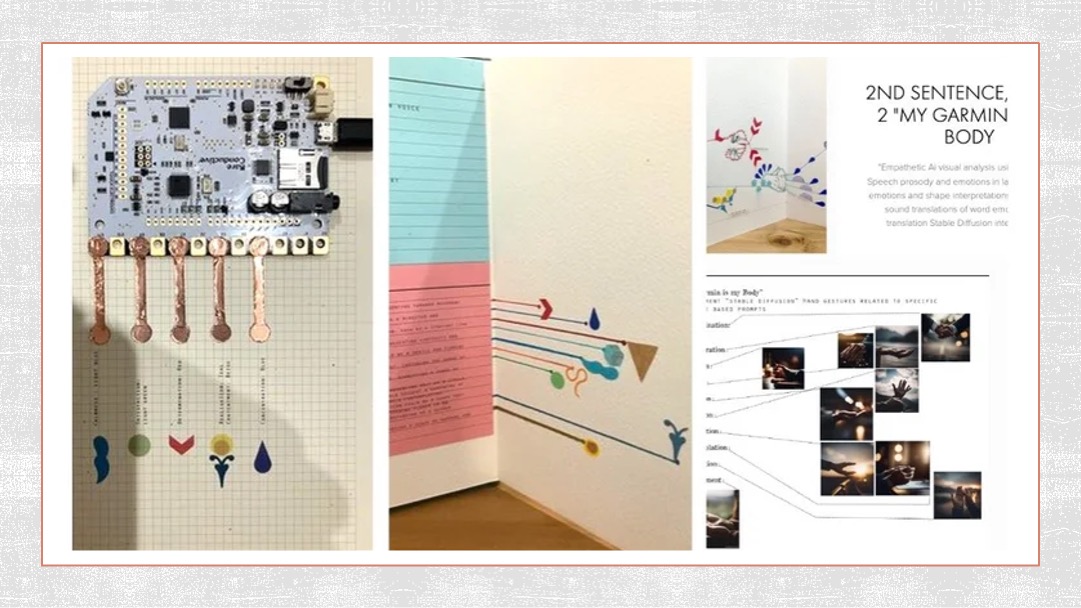

In my initial experiments, I explored merging interactive technology with visual art, using the Bare Conductive Touch Board, a projector, and electric paint to trigger animations based on physical touch.

What happened?

At this stage, I was really just experimenting. I was trying to develop a visual language, and I thought, "Why not use my animation skills?" That's when I discovered projection mapping, which seemed like a perfect fit. The idea was that a single touch on a sensor would trigger an animation representing a specific emotion, with each gesture or symbol mimicking the emotion it was tied to.

I had a basic projector at the time, and, frankly, the results weren't perfect. The concept looked okay, but the projection itself just wasn't bright enough. It was exciting to see something happen at the touch of a sensor, but the result lacked the visual impact I knew my own digital animations could achieve.

However, I really liked the touch sensors. They were reliable, and they let me translate sound into visual prompts drawn from the chatbot itself. That's when things clicked. Not only could a chatbot engage in emotional conversation with a human, but it could also interpret emotions through sound.

That was a pivotal moment for me: the realization that emotional communication could go beyond language alone. It could also be conveyed through sensory and auditory experiences, adding layers to how AI might truly understand and engage with emotions.