Following on from this investigation, Arunav asked to what extent this chatbot uses both extracted information and generative AI, as there did not seem to be any information available about how the bot was trained. It was evident that, although its creative outputs were literal, the same prompt did not always lead to the same response. On closer inspection, this specific bot had a mix of extracted (rules-based) and generative training, in contrast to the careful rules-based approach of official developers of mental health bots, who have been cautious about introducing generative AI.

The original Woebot, created in 2017, was discontinued in April 2024. This particular mental health bot did not include generative AI features and was purely rules-based, due to concerns about potential hallucinations and inappropriate results for vulnerable patients. Woebot was the official regulated health bot. However, in 2024, clinical trials were being conducted to assess the feasibility of integrating generative AI and how it might be implemented. Currently, there is no available version of it.

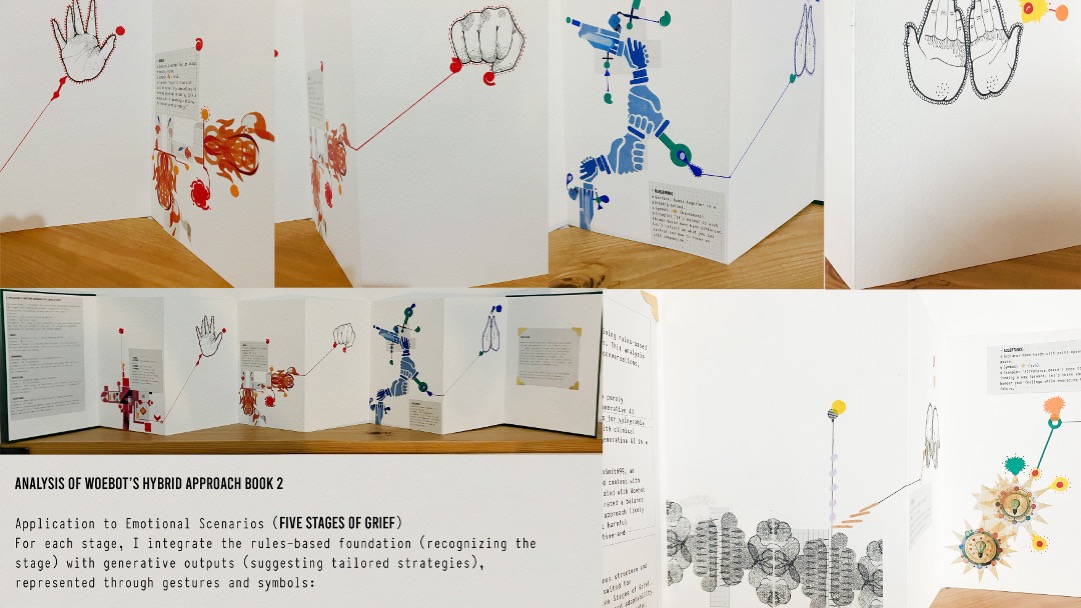

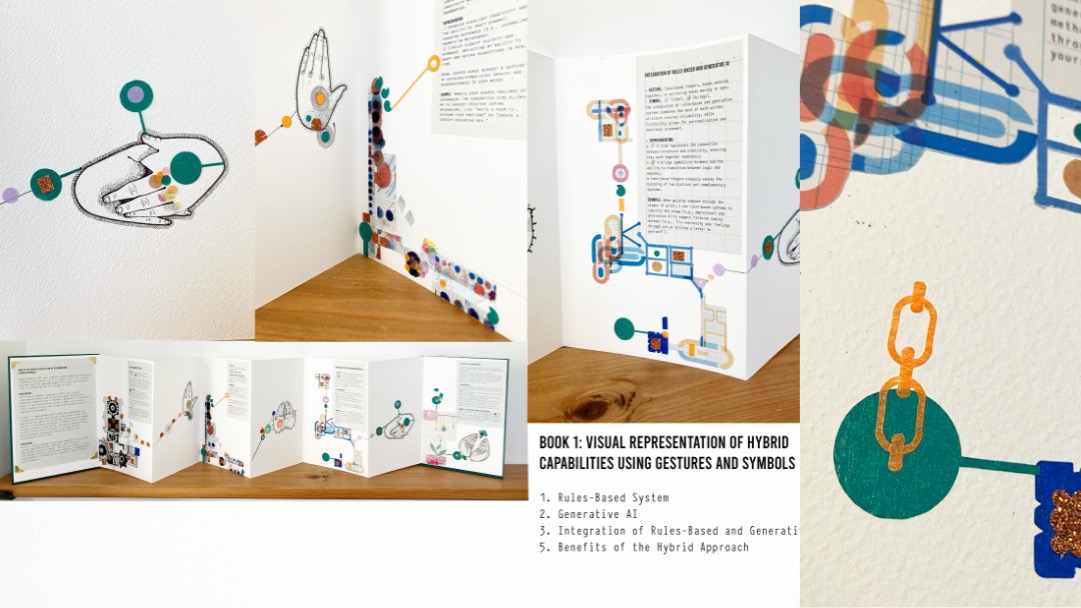

Nevertheless, I came across a version called Woebot-Health-128k by @TomSmith99 in 2024, which is unregulated and combines rules-based content with generative AI. This discovery might explain why, although the bot could produce creative outcomes, they were very literal. Combining the two approaches possibly limited its creative output in order to prevent harmful hallucinatory responses.

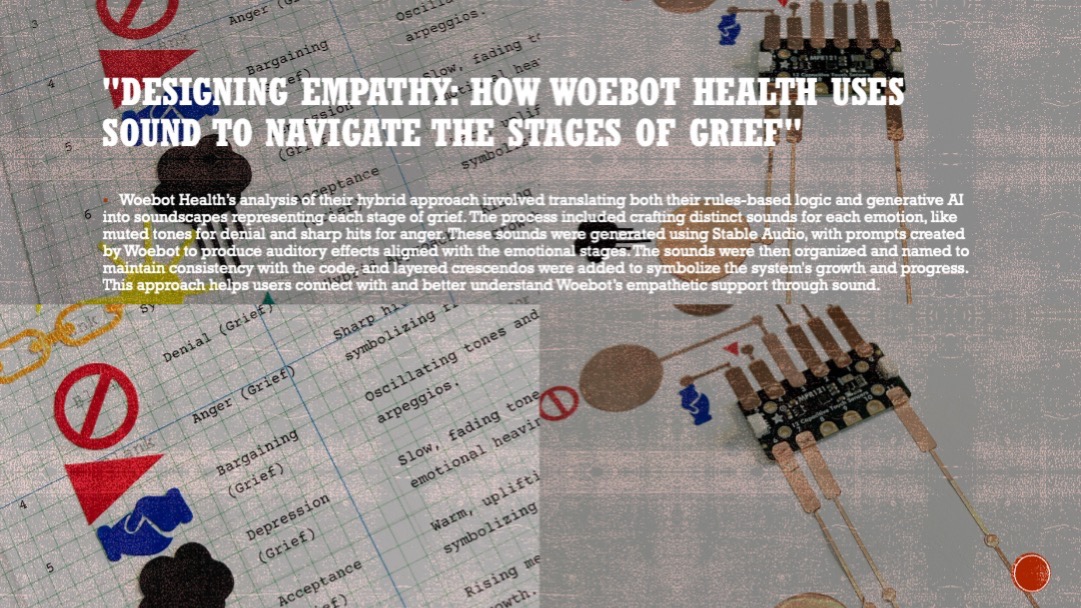

It's important to note that this mental health chatbot is not officially affiliated with Woebot Health and may not have the same clinical validation or safeguards. However, it was interesting to consider how this hybrid approach might have affected its creative output, and whether the integration of generative AI allowed for more personalised, emotionally considered conversations. None of the outputs appeared controversial or upsetting, although I am guessing that the risk of such outputs is why the official Woebot Health app is undergoing trials to rigorously assess its effectiveness and whether generative AI could be safely implemented in an official mental health app. Arunav highlighted that it would be useful to explore in more detail how these two elements, generative AI and a rules-based approach, are being combined and used.
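To make the idea of a hybrid bot more concrete, here is a minimal sketch of one way a rules-based layer and a generative layer could be combined. It is purely illustrative and is not how Woebot or Woebot-Health-128k is actually built, since no information about their internals was available. The rules, the blocklist, the fallback message, and the `generate` function are all hypothetical names introduced for this example; `generate` stands in for whatever generative model a real bot would call.

```python
import re
from typing import Callable, Optional

# Hypothetical scripted rules: each pattern maps to a fixed, pre-approved reply.
RULES = [
    (re.compile(r"\b(hopeless|can't go on)\b", re.I),
     "I'm concerned about what you've shared. Please reach out to a crisis line or someone you trust."),
    (re.compile(r"\b(anxious|anxiety|worried)\b", re.I),
     "It sounds like you're feeling anxious. Would you like to try a short breathing exercise?"),
]

# Hypothetical blocklist: generated drafts touching these topics are not shown.
BLOCKED = re.compile(r"\b(diagnos\w*|prescri\w*|dosage)\b", re.I)

# Safe scripted reply used when a generated draft is rejected.
FALLBACK = "Thank you for sharing that. Could you tell me a bit more about how you're feeling?"


def rule_reply(message: str) -> Optional[str]:
    """Return the first scripted reply whose pattern matches, or None."""
    for pattern, reply in RULES:
        if pattern.search(message):
            return reply
    return None


def hybrid_reply(message: str, generate: Callable[[str], str]) -> str:
    """Try the rules first; otherwise use the generative model, then filter its draft."""
    scripted = rule_reply(message)
    if scripted is not None:
        return scripted
    draft = generate(message)
    if BLOCKED.search(draft):
        return FALLBACK
    return draft
```

In a design like this, sensitive messages always receive a scripted reply, and generated text is only shown if it passes the filter. That would be consistent with a bot whose replies vary from one attempt to the next but stay cautious and literal, although this is only a guess at the kind of mechanism involved.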

Conclusion

The integration of generative AI with rule-based systems presents exciting opportunities for creating more empathetic and personalised mental health tools. However, as demonstrated by Woebot-Health-128k, this approach requires meticulous design, clinical validation, and ethical oversight. The ongoing trials for the official Woebot app underscore the importance of safety in this domain, while the hybrid model offers a glimpse into future possibilities. Exploring this combination further will likely shape the next generation of mental health chatbots.