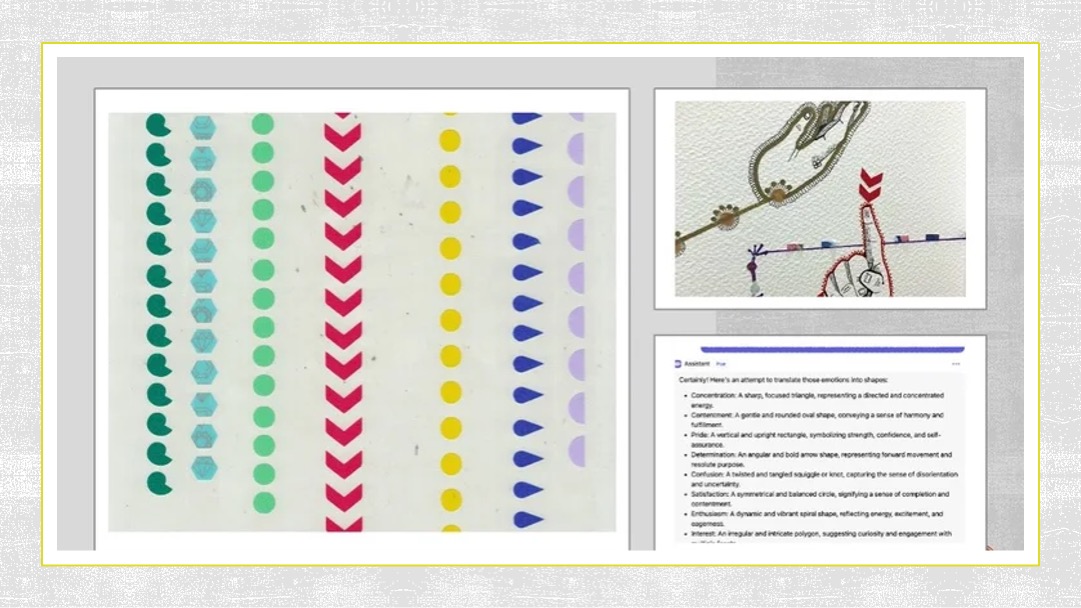

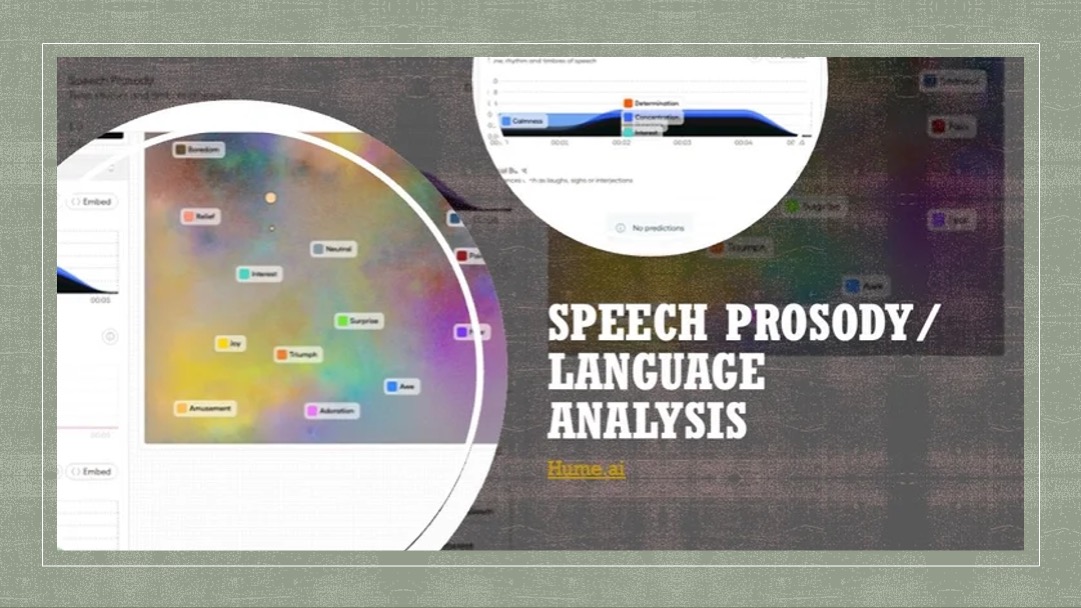

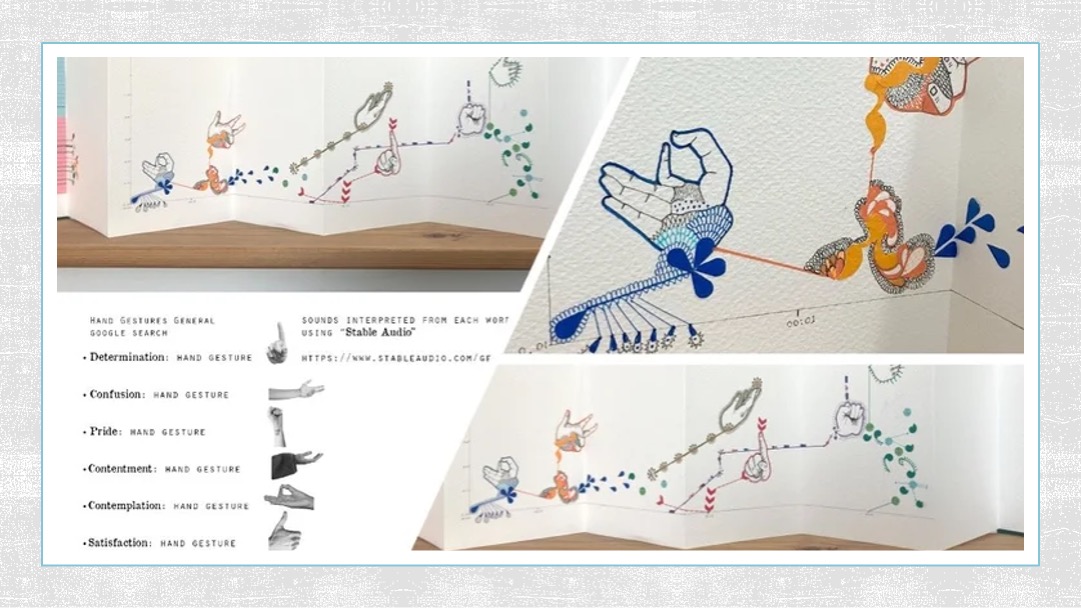

My goal was to find a leading force in developing empathetic AI, and Hume.AI emerged as the answer. It uses semantic space theory and machine learning to explore human emotional expression, and it offers comprehensive models and datasets for analysing emotion in speech, facial expressions, and reactions. With Hume.AI as the foundation, I built an emotional multi-modal graph to test AI's growth in empathy. The graph combined speech prosody analysis, emotive language interpretation, and sound analysis using Stable Audio prompts. I used Poe.com to shape the analysis.

Project visuals

When I started exploring Hume.AI, I was blown away. Each word and each sentence could be broken down and scored across a range of emotions. It was wild to see how language, the way we speak, could be analysed and dissected in such a methodical, almost surgical way. This was the turning point. As human beings, we like to think of ourselves as unique: that our emotions, the way we feel, are almost otherworldly and beyond explanation. Yet here we are, creating a virtual entity that can hold conversations that feel almost human. These conversations can evoke real emotions, such as sadness, happiness, and even moments of connection. All of this happens through understanding words broken down to their core, creating emotional depth in a way I never expected.