6/7/8/9 Sentence: I walk, I feed my data. I sleep, I feed my data. I eat, I feed my data. I date, I feed my data.

Using the sentence ‘I walk, I feed my data’ and its variations, this film/artwork investigates how data impacts human decision-making and how chatbots like Copilot and Replika interpret this relationship. The resulting visuals and soundscapes reflect the nuances of their emotional comprehension.

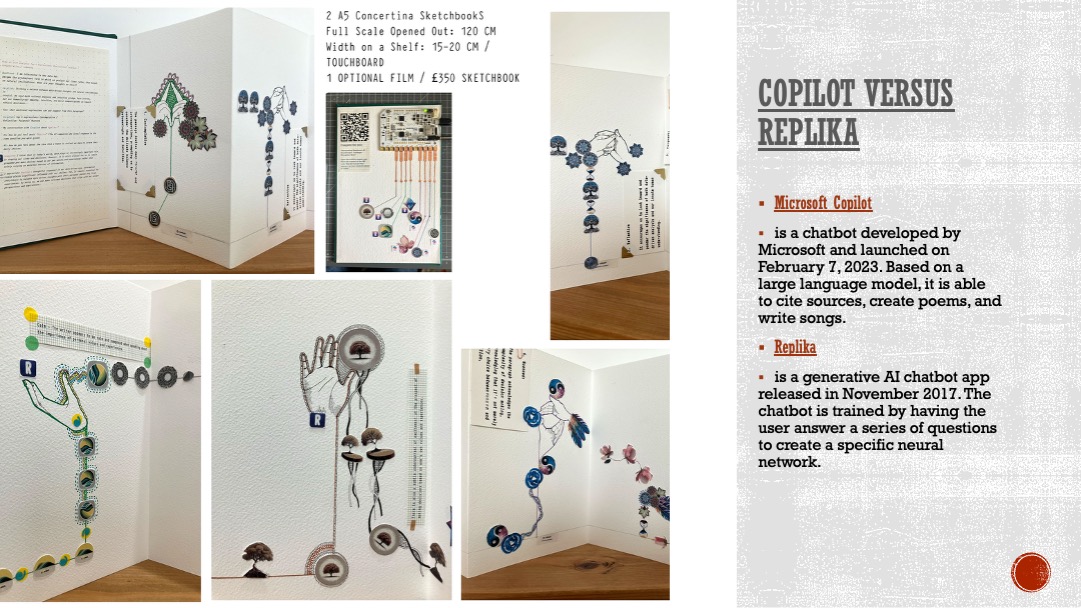

Microsoft Copilot

Copilot is a chatbot developed by Microsoft and launched on February 7, 2023. Based on a large language model, it is able to cite sources, create poems, and write songs.

Replika

Replika is a generative AI chatbot app released in November 2017. The chatbot is trained by having the user answer a series of questions to create a specific neural network.

Visual outcomes

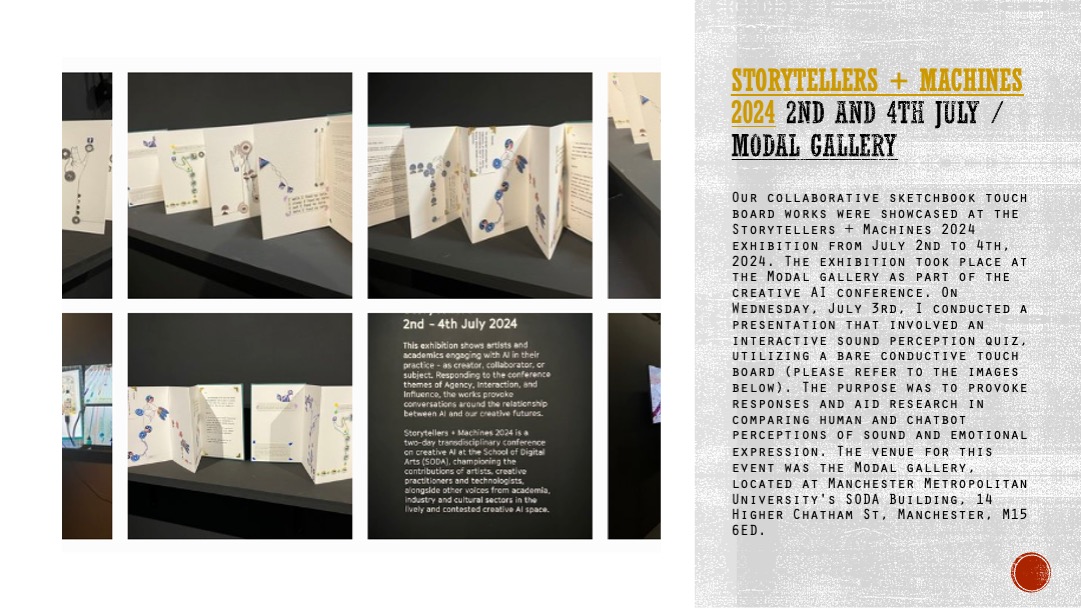

Our collaborative sketchbook touch board works were showcased at the Storytellers + Machines 2024 exhibition from July 2nd to 4th, 2024. The exhibition took place at the Modal gallery, in Manchester Metropolitan University's SODA Building (14 Higher Chatham St, Manchester, M15 6ED), as part of the creative AI conference. On Wednesday, July 3rd, I gave a presentation that included an interactive sound perception quiz using a Bare Conductive Touch Board (please refer to the images below). The purpose was to provoke responses and support research comparing human and chatbot perceptions of sound and emotional expression.

The Storytellers + Machines conference was exceptionally well organized and thoughtfully curated. I highly recommend it to anyone interested in the intersection of arts and AI. It was incredibly inspiring to witness the diverse perspectives and imaginative uses of AI in other artists' practices. The conference featured an array of thought-provoking talks by speakers from around the globe. I left with a sense of optimism for the future of AI, knowing that numerous researchers are actively questioning its development and potential applications and working to establish best practices.

I thought it would be interesting to conduct a quiz to explore the relationship between chatbots' understanding of emotions and that of humans. Feel free to take part in this research by clicking on the video link, "Interpreting Emotional AI Soundscapes: Audience Perception Quiz", and then using either the QR code presented at the start of the video or the link below to access the quiz. It is important to watch the tutorial while taking the quiz so that you can tell which sounds correspond to each question.

How it works!: Audience Perception Quiz.

Visual interpretations: Sketchbook outcomes.

Conclusive hypothesis: Heidi Stokes & Arunav Das

During our investigation, we compared Copilot and Replika to assess their empathetic understanding. As in previous sketchbooks, I asked both companion AIs questions focused on the analysis of a specific sentence, "I walk, I feed my data," to explore how data impacts human decision-making. This allowed me to generate prompts from both to create sound and visuals, resulting in the visual empathetic graph you see above. Please watch the video to find out the results.